WX+b vs XW+b: why do theory and implementation use different formulas for deep neural networks?

The problem:

In most neural network textbooks, layers combine features using y = WX + B or y = W'X + B. However, in TensorFlow and Theano implementations, they are implemented as y = XW + B. I spent a lot of time investigating the reason for this discrepancy and came up with the following. TL;DR: I think it's an implementation issue, because computing derivatives for y = XW + B is easier than for y = WX + B.
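Both forms compute the same layer outputs. Here is a minimal NumPy sketch that assumes the batch-first layout typical of TensorFlow/Theano-style code: one example per row of X, and W shaped (inputs, outputs). The sizes and random values are arbitrary placeholders.

```python
import numpy as np

# Hypothetical sizes: a batch of 4 examples, 3 input features, 2 output units.
rng = np.random.default_rng(0)
batch, n_in, n_out = 4, 3, 2

X = rng.standard_normal((batch, n_in))  # one example per ROW (batch-first layout)
W = rng.standard_normal((n_in, n_out))  # weights laid out as (inputs, outputs)
b = rng.standard_normal(n_out)          # bias broadcasts across the batch

# Implementation form: y = XW + b, result has shape (batch, n_out).
y_impl = X @ W + b

# Textbook form: y = W'x + b applied to each example as a COLUMN vector.
# Stacking those columns gives W.T @ X.T with shape (n_out, batch),
# so the bias has to become a column vector as well.
y_book = W.T @ X.T + b[:, None]

# The two results agree up to a transpose.
print(np.allclose(y_impl, y_book.T))  # True
```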

Theoretically, W'X + B (or WX + B) is how neural network math is presented in books, and it is equivalent to XW + B up to a transpose. However, when computing derivatives, the two formulations are not equivalent. Note that WX + B is a vector, and we are taking its derivative with respect to a matrix (W). The derivative of a vector with respect to a matrix is a 3-D tensor: each element of y is differentiated with respect to each element of W, and the result is stored in the (i, j, k) element of the tensor, where i is the index of the element in y and j and k are the row and column indices of the element in W. Computing this tensor means computing and storing additional matrices. See Eq. 74 in the Matrix Cookbook, http://www2.imm.dtu.dk/pubdb/views/edoc_download.php/3274/pdf/imm3274.pdf.
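A minimal NumPy sketch of that 3-D tensor for y = WX with a single input vector x and the bias dropped. The sizes are hypothetical, and building δ_ij x_k with `np.einsum` is just one convenient way to fill the tensor; a finite-difference loop checks the analytic entries.

```python
import numpy as np

# Hypothetical sizes: W is m x n, x is a column vector of length n, so y = Wx has length m.
rng = np.random.default_rng(1)
m, n = 2, 3
W = rng.standard_normal((m, n))
x = rng.standard_normal(n)

# Analytic derivative: y_i = sum_k W[i, k] * x[k], so dy_i/dW[j, k] = delta(i, j) * x[k].
# Stored as a 3-D tensor J[i, j, k] with shape (m, m, n).
J = np.einsum("ij,k->ijk", np.eye(m), x)

# Finite-difference estimate of the same tensor, perturbing one weight at a time.
eps = 1e-6
J_fd = np.zeros((m, m, n))
for j in range(m):
    for k in range(n):
        W_pert = W.copy()
        W_pert[j, k] += eps
        J_fd[:, j, k] = (W_pert @ x - W @ x) / eps

print(J.shape)                          # (2, 2, 3)
print(np.allclose(J, J_fd, atol=1e-5))  # True
```

Most entries of `J` are zero: only the m * n entries with i == j are non-zero.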

With XW + B, the derivative of the output with respect to each element of W is much simpler: it is just X with some elements set to zero. There is no need to compute transposes of the data or store tensor derivatives. Whenever you need the derivative, you can build a matrix the size of the weights whose entries are taken from the input X, with the remaining elements set to zero.
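A companion sketch for y = xW, with x a single row vector, hypothetical sizes, and no bias. It checks that each slice J[k] of the derivative has the same shape as W and equals x copied into column k, with zeros everywhere else.

```python
import numpy as np

# Hypothetical sizes: x is a row vector of length n, W is n x m, so y = xW has length m.
rng = np.random.default_rng(2)
n, m = 3, 2
x = rng.standard_normal(n)
W = rng.standard_normal((n, m))

# y_k = sum_i x[i] * W[i, k], so dy_k/dW[i, j] = x[i] * delta(j, k).
# J[k] holds the derivative of y_k with respect to every element of W (same shape as W).
J = np.einsum("i,jk->kij", x, np.eye(m))

for k in range(m):
    # Build the same slice directly: a zero matrix the size of W,
    # with x copied into column k.
    expected = np.zeros_like(W)
    expected[:, k] = x
    assert np.allclose(J[k], expected)

print(J.shape)  # (2, 3, 2)
```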

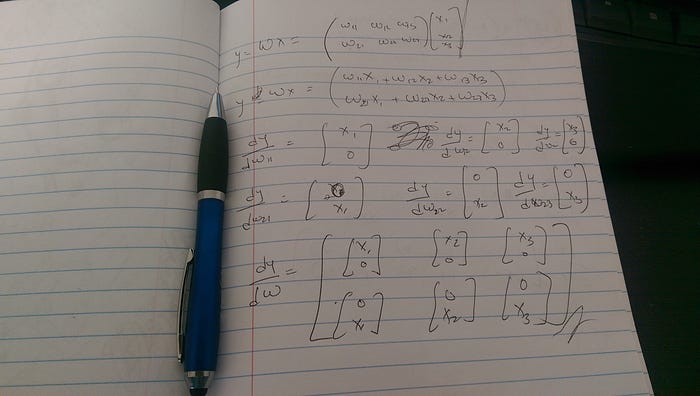

I think this is the main reason to use XW + B rather than WX + B. Below is the derivation of the derivative for y = XW and y = WX (bias dropped). I think the XW form is better suited to NumPy and other Python packages, hence the use of XW instead of WX. If you agree, disagree, or have any other opinion, please feel free to add more information.

For y = XW
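A sketch of this derivation in index notation, assuming X is a single 1×n row vector x and W is n×m (this notation is assumed here, not taken from an original figure). δ is the Kronecker delta and e_k is the k-th unit column vector of length m.

```latex
\begin{aligned}
y_k &= \sum_{i=1}^{n} x_i W_{ik}, \qquad k = 1,\dots,m \\
\frac{\partial y_k}{\partial W_{ij}} &= x_i\,\delta_{jk} \\
\frac{\partial y_k}{\partial W} &= \mathbf{x}^{\top}\mathbf{e}_k^{\top} \in \mathbb{R}^{n \times m}
\end{aligned}
```

So the derivative of y_k is an n×m matrix (the shape of W) whose column k is x and whose other entries are zero.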

For y = WX
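The corresponding sketch, assuming X is a single n×1 column vector x and W is m×n (again an assumed notation). δ is the Kronecker delta and e_i is the i-th unit column vector of length m.

```latex
\begin{aligned}
y_i &= \sum_{k=1}^{n} W_{ik} x_k, \qquad i = 1,\dots,m \\
\frac{\partial y_i}{\partial W_{jk}} &= \delta_{ij}\,x_k \\
\frac{\partial y_i}{\partial W} &= \mathbf{e}_i\,\mathbf{x}^{\top} \in \mathbb{R}^{m \times n}
\end{aligned}
```

Collecting the derivatives of all m outputs gives the m×m×n tensor with entries δ_ij x_k.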